NANOLM: a 80 lines of code (nano) Language Model builder

Here you may find a lightweight tutorial with examples on how language models Large Language Models such as ChatGPT work in a very very simplified setting, accessible to K12 kids taking the first steps in programming and computational thinking.

It is based on a tiny 80 lines Python program that, after training with some text content, uses the context of just the last two words to predict the next word to generate text on the fly.

Large Language Models such as ChatGPT and Gemini are all the rage these days ! Although LLMs are based on sophisticated machine learning techniques behind generative artificial intelligence tools like ChatGPT, at the very bottom they rely on statistical prediction of what is the most likely word (or token) to follow a prompt. NanoLM uses instead a very elementary statistical model called a Markov Chain. It is something really simple, as I explain the my slides (see below).

The slides and code are based on a lecture at my Programming Fundamentals course for freshers at Instituto Superior Técnico Informática no Técnico and inspired on a exercise in “The Practice of Programming” old textbook by Kernighan & Pike.

The generated text is of course fairly messy in general, but very often it also makes sense, and may even be fun 🙂 ! You may try to further develop NanoLM, it is just an embryo, that can be improved it in many ways.

The generated text only by chance appears in the original training set, as it is produced at random based on a coarse probability distribution “learned” from the training data.

The Python code also types the text out like NanoLM is “thinking” at the keyboard 🙂

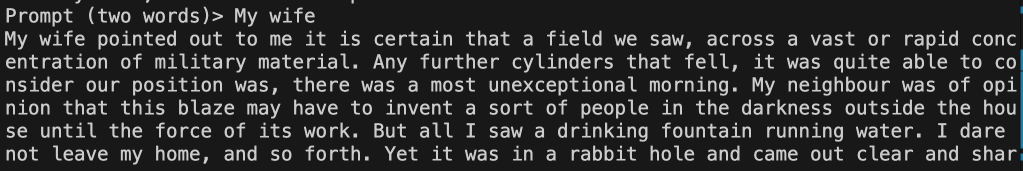

Example trained with “The War of the Worlds” (H. G. Wells).

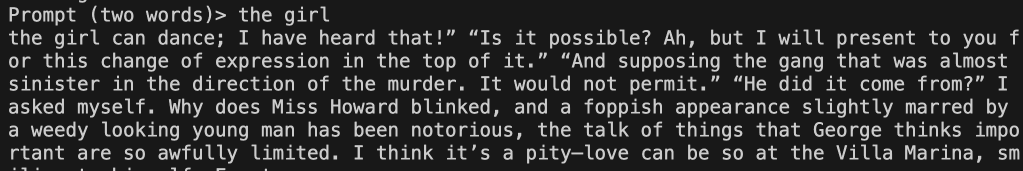

Example trained with some novels by Agatha Christie.

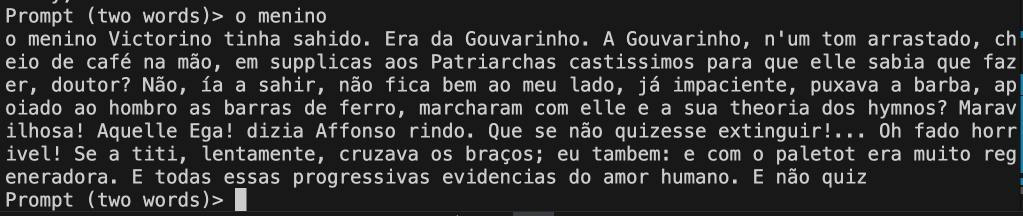

Example trained with “Os Maias” + “A Relíquia” (Eça de Queiroz).

The idea is based on an programming exercise in the famous old book “The Practice of Programming” by Brian Kernighan & Rob Pike, 1999, which I have adapted to the spirit of LLM chatbot (I am grateful to Philip Wadler to reminding me of this :-). It consists of a 80 line Python program, that and some sample training corpus (retrieved, with due credit, from Project Gutenberg). The code reads some training data, and completes two word prompts, as in the screen shots above.

Presentation Slides – here you may find the presentation slides (Google Slides). They were initially conceived for a 45m lecture for 1st year Programming Fundamentals course at Técnico.

Software – here you may find a link to the software (GitHub). It contains the nanolm.py code and a set of text files containing books by Dickens, Agatha Christie, H. G. Wells, Shakespeare, the Bible, Suetonius, Eça de Queiroz and Luis de Camões. You may adapt and play with code. It is based on a very simple markov chain model that just uses the context of the last two words to predict the next word.

Acknowledgements: Pedro Resende, João Ferreira, Ana Sokolova, Philip Wadler.